Imagine this scenario: you have successfully trained a nice big neural network model for your side project. The next step is deploying it, making it available for everyone to use and benefit from. However, you quickly realize that deploying your model is easier said than done. Running your model solely on a CPU would be too slow, but upon exploring GPU offerings, you are confronted with a disheartening reality – keeping a GPU running, even for the most basic on-demand g4dn instance on AWS, would cost an exorbitant $400 USD per month, which is probably more than you are willing to pay for hosting your side project. Not to mention, in the beginning when you may only receive a few requests a day, keeping such a powerful GPU instance feels wasteful. You may wonder: what viable alternatives exist? How can you deploy your model affordably without compromising on availability and latency?

This is exactly the challenge we encountered when deploying our Efficient NLP translation models. To give you some context, Efficient NLP is the translation engine powering LevelText, a language learning app that I developed. In LevelText, users search the web for articles in a foreign language, and our backend must translate a batch of web articles in real time to return the results. The translation therefore needs to happen fairly quickly, or else the user will get impatient and go do something else.

We decided to deploy our model with serverless GPUs. In the serverless computing architecture, instead of managing instances, you define functions that are triggered by specific events, such as an HTTP request. Typically you package your model and inference code into a Docker container and push the Docker image to a cloud registry like AWS ECR. The beauty of serverless lies in its automated resource provisioning: the platform takes care of allocating and shutting down resources as needed to execute your function. The good news is that you pay only for the time the function is actually running, so if the function is idle most of the time, your cost can be as low as a few dollars a month rather than hundreds. Also, if your side project suddenly ends up on Hacker News and gains traction, serverless lets you scale up automatically to meet the demand without additional engineering effort.
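To make the pay-per-use argument concrete, here is a rough back-of-the-envelope sketch. The $400 monthly figure is the always-on estimate from above; the per-second rate, request volume, and seconds per request are hypothetical placeholders rather than quotes from any provider, so substitute your own numbers.

```python
# Back-of-the-envelope comparison of always-on vs. serverless GPU cost.
# Every serverless number used here is a hypothetical placeholder.

ALWAYS_ON_MONTHLY_USD = 400.0  # always-on GPU instance estimate quoted above


def serverless_monthly_cost(requests_per_day, seconds_per_request,
                            usd_per_gpu_second, days=30):
    """Cost when billed only for the seconds the function actually runs."""
    billed_seconds = requests_per_day * seconds_per_request * days
    return billed_seconds * usd_per_gpu_second


def break_even_requests_per_day(seconds_per_request, usd_per_gpu_second, days=30):
    """Daily request volume at which serverless costs as much as always-on."""
    cost_per_request = seconds_per_request * usd_per_gpu_second
    return ALWAYS_ON_MONTHLY_USD / (cost_per_request * days)


rate = 0.0005  # hypothetical USD per GPU-second
cost = serverless_monthly_cost(requests_per_day=50, seconds_per_request=3,
                               usd_per_gpu_second=rate)
print(f"serverless: ${cost:.2f}/month, always-on: ${ALWAYS_ON_MONTHLY_USD:.2f}/month")
print(f"break-even: {break_even_requests_per_day(3, rate):.0f} requests/day")
```

With these placeholder numbers, a low-traffic project stays in the few-dollars range, and the always-on instance only becomes cheaper at thousands of requests per day.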

When it comes to serverless for deep learning models, several challenges arise. The first is that AWS (as well as other major cloud providers like GCP and Azure) does not currently offer serverless GPUs. AWS provides several serverless options like Lambda and SageMaker Serverless Inference, but they are CPU-only. This limitation stems from their underlying infrastructure: AWS serverless services including Lambda and Fargate are built on Firecracker, a virtualization engine designed for quickly and securely spinning up serverless containers, and Firecracker has no GPU support.

With serverless computing, the most crucial metric is the time to start up a container, commonly known as cold start time. This rules out alternative options such as spinning up EC2 instances on-demand to handle requests: while EC2 instances offer GPU capabilities, they are not optimized for quick startup times (typically taking around 40 seconds to start up), which is impractical for time-sensitive applications.

Fortunately, there are a number of startups that have emerged to fill this gap and offer serverless GPUs, such as Banana, Runpod, Modal, Replicate, and many more. They are similar but differ on cold start time, availability and pricing of different GPUs, and configuration options. We evaluated several and opted for one of these startups, but the serverless GPU landscape is quickly evolving, and the most suitable option for you may be none of the above by the time you read this.

Also worth noting: before choosing a serverless GPU startup, it’s a good idea to run some benchmarks and check whether deploying your model on a CPU instance is really out of the question. With multicore processors, large-memory instances, and newer CPU instruction sets like AVX-512, running the model on CPUs is often fast enough. Deploying on CPUs is generally easier, and you will have more options to choose from on the major cloud providers.

The next step is making your model smaller and/or faster. This can be achieved through several different approaches:

- Quantization: This means reducing the precision of each weight in the model, for example from fp32 to fp16 or int8, or sometimes even lower. Converting from fp32 to int8 gives a 4x reduction in model size. There are several types of quantization. For int8 in particular, the question is how to determine the scale factor, which maps floating-point values to integers in the range 0-255. The easiest approach is dynamic quantization, where the scale factors are determined at runtime. The alternative is static quantization, where the scale factors are precomputed using a calibration set, but the additional speedup is minimal, so it’s probably not worth it. Hardware and runtime considerations also affect how much speedup quantization gives, which I’ll get into later.

- Pruning: This entails setting certain weights in the model to zero. The easiest method is magnitude pruning, which eliminates the lowest-magnitude weights, but there are other methods like movement pruning or lottery ticket pruning. In certain circumstances up to 90% of the weights may be pruned. The challenge with pruning is that it requires a specialized runtime capable of handling sparse models; otherwise, setting weights to zero won’t make the model any faster. One promising library is Neural Magic’s DeepSparse, which runs sparse models efficiently on the CPU, but I haven’t tried it myself because it currently doesn’t support any sequence generation models (including translation).

- Knowledge Distillation: Distillation means taking a larger “teacher” model and using it to “teach” a smaller “student” model to predict the teacher’s outputs. When done correctly, this is extremely effective, and of all the approaches it has the best potential for making a model faster. The student model can have a completely different architecture that’s optimized for fast inference. For example, in machine translation, knowledge distillation has been applied to train non-autoregressive models or shallow decoder models (see my video on NAR and shallow decoder models for details). However, knowledge distillation is relatively compute-intensive, as it requires setting up the training loop and running the entire training dataset through the teacher model to obtain its predictions. In my experience from other projects, knowledge distillation typically requires about 10% of the compute of training the teacher model from scratch.

- Engineering optimizations: This aspect is underreported in the literature but can contribute significantly to performance gains. Examples include profiling and optimizing critical code paths, performing the inference in C++ instead of Python, and using fused kernels and operators that are optimized for GPU microarchitectures, such as FlashAttention. This sounds intimidating, but in practice it often just means exporting your model and using an inference runtime engine that performs the appropriate optimizations (and that also supports your model architecture).
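To illustrate the scale-factor idea from the quantization bullet, here is a minimal sketch in plain Python. It assumes asymmetric (affine) quantization of a single list of values to the 0-255 range, with the scale computed from the observed minimum and maximum, as a runtime might do on the fly. The function names are my own, and real runtimes differ in details such as per-channel scales or symmetric int8 ranges.

```python
def quantize_uint8(values):
    """Map floats to integers in [0, 255] using a scale and zero point
    derived from the observed value range."""
    lo = min(min(values), 0.0)  # extend the range so that 0.0 is representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(-lo / scale)   # integer that represents the float 0.0
    quantized = [min(255, max(0, round(v / scale) + zero_point)) for v in values]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate floats from the stored integers."""
    return [(q - zero_point) * scale for q in quantized]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
q, scale, zero_point = quantize_uint8(weights)
restored = dequantize(q, scale, zero_point)
print(q, scale, zero_point)
print(max(abs(a - b) for a, b in zip(weights, restored)))  # worst-case rounding error
```

Each value is stored in one byte instead of four, which is where the 4x size reduction comes from; the price is a rounding error bounded by roughly one quantization step per value.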

Of these four approaches, quantization and engineering optimizations are usually the most effective ways of achieving a speedup with minimal effort. They don’t require additional training, which makes them relatively straightforward to implement. A notable example is llama.cpp, which makes heavy use of quantization and hardware-specific optimizations to run LLaMA models on CPUs.

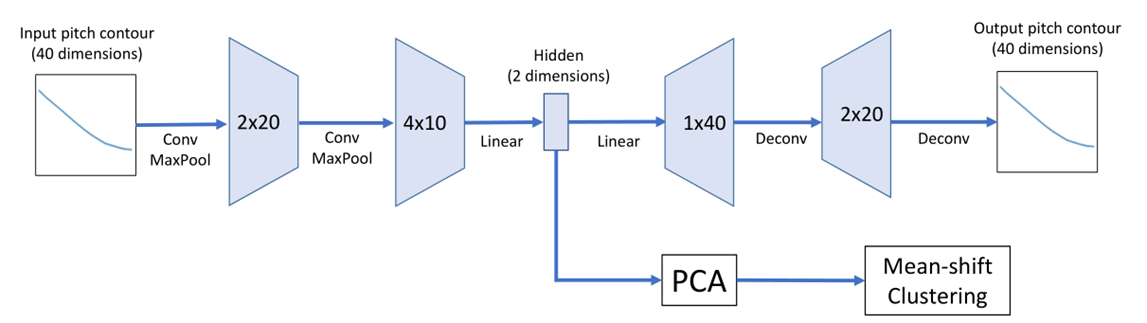

Above: Latency for one of our translation models, showing the cold start time and inference time for translating 5000 characters. The original model is run using Hugging Face and the quantized model on an optimized runtime.

In our case, our original model took about 18 seconds to process a single request after a cold start, which was unacceptable. The majority of this time was spent cold starting the model, which involves retrieving the Docker image containing the model from the cloud. Reducing the model size was therefore the crucial factor, since the cold start time is determined by the size of the model and Docker image. By quantizing the model to int8 and removing unnecessary dependencies from the image, we were able to reduce the cold start time by about 5x and bring the worst-case overall latency down to about 3 seconds.

Note that quantizing a model results in a slight decrease in accuracy. The extent of this drop depends on the quantization method and the model itself; in our case, after quantizing our model from fp32 to int8, we observed a degradation of about 1 BLEU point compared to our original model, which is not really noticeable. Nevertheless, it is a good idea to benchmark your model after quantization to measure the degradation and validate that the accuracy is still acceptable.

Another crucial optimization is running the model on an efficient runtime. Why is this necessary? The requirements of the training and prototyping phases differ significantly from those of the deployment phase. Training requires a library that can calculate gradients for backpropagation, loop over large datasets, split the model across multiple GPUs, etc. None of this is necessary during inference, where the goal is usually to run a quantized version of the model on a specific target hardware, whether it be CPU, GPU, or mobile.

Therefore, you will often use a different library for running the model than for training it. To bridge this gap, a commonly used standard is ONNX (Open Neural Network Exchange). By exporting the model to ONNX format, you serialize its computation graph and weights into a framework-independent file, which you can then load and execute with a different engine that is specifically tailored for deployment.

The execution engine needs to not only execute the forward pass on the model but also handle any inference logic, which can be quite complex and sometimes model-specific. For instance, generating a sequence output requires autoregressive decoding, so the engine needs to perform the model’s forward pass in a loop to generate tokens one at a time, using some generation algorithm like nucleus sampling or beam search (see my video if you’re interested in the glorious details of how beam search is implemented in Hugging Face).
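The decoding loop described above can be sketched in a few lines. This is a toy illustration using greedy decoding, the simplest generation algorithm, rather than beam search or nucleus sampling, which replace the "pick the top token" step with more elaborate selection logic. The `toy_forward` function and the `EOS` token id are hypothetical stand-ins for a real model's forward pass and vocabulary.

```python
from typing import Callable, List

EOS = 0  # hypothetical end-of-sequence token id


def greedy_decode(forward: Callable[[List[int]], List[float]],
                  prompt: List[int], max_new_tokens: int = 20) -> List[int]:
    """Run the forward pass in a loop, appending the top-scoring token each step."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = forward(tokens)  # one forward pass per generated token
        next_token = max(range(len(scores)), key=scores.__getitem__)
        tokens.append(next_token)
        if next_token == EOS:  # stop once the model emits end-of-sequence
            break
    return tokens


def toy_forward(tokens: List[int], vocab_size: int = 5) -> List[float]:
    """Stand-in for a real model: favors (last token + 1) modulo the vocab size."""
    target = (tokens[-1] + 1) % vocab_size
    return [1.0 if i == target else 0.0 for i in range(vocab_size)]


print(greedy_decode(toy_forward, [2]))
```

Because every new token needs its own forward pass, the cost of this loop, including any Python overhead around it, is paid once per generated token, which is why engines that implement it in optimized native code can be noticeably faster.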

We considered several libraries for our translation model inference. Here they are (by no means an exhaustive list):

- Hugging Face: this is a widely used library that is familiar to a lot of people and a good choice for prototyping and general-purpose use, as long as performance is not too crucial. However, since it is written in Python, it is often slower than other libraries; additionally, it is implemented on top of PyTorch and PyTorch lacks support for certain optimizations such as running quantized models on GPU. Nonetheless, Hugging Face is a good starting point to iterate from, and in many cases, the performance will be sufficient and you can move on with your project.

- ONNX Runtime (ORT): this is probably what I’d use for most models. It handles the forward pass of models converted to the ONNX format on various deployment targets. When converting from Hugging Face models, the Hugging Face Optimum library provides an interface to ORT that is similar to Hugging Face AutoModels and handles the scaffolding logic like beam search. In an ideal world, the Optimum interface to ORT would be identical to that of Hugging Face’s AutoModels, but in reality, due to underlying differences between ORT and PyTorch, an exact match may not be possible. For instance, in our case, ORT seq2seq models require splitting the encoder and decoder into separate models, since ORT cannot execute parts of the model conditionally the way PyTorch can.

- Bitsandbytes: this is a specialized library that uses a mixed-precision decomposition for quantization. This is necessary for very large models exceeding about 6B parameters: the authors found that such models have outlier dimensions, and the usual quantization method completely degrades the model’s performance. For models smaller than 6B parameters, this mixed quantization technique is probably not necessary. Bitsandbytes integrates with Hugging Face, allowing you to load the weights onto the GPU in int8 format, but the model weights themselves must be stored on disk in fp32 format. Consequently, there is no reduction in model size on disk and therefore no reduction in the model’s cold start time.

- CTranslate2: this is a library for running translation models on CPU and GPU. It is written entirely in C++, including the beam search, and is designed to be fast and have minimal dependencies. It supports only a handful of specific model architectures (mostly translation, but also some language and code generation models), and it is not straightforward to extend the library to other architectures, but it may be worth considering if your model is on the list of supported architectures.

In this post, our primary reason for quantization was to reduce the model size. Quantization can also make the model faster, but only on certain hardware. On newer GPUs equipped with tensor cores, int8 operations are up to 4x faster than fp32 operations, whereas on older GPUs, int8 and fp32 operations have similar performance. Additionally, when the model and data occupy less GPU memory, you can use larger batch sizes and consequently get higher throughput for batch processing. If these performance considerations are important to you, it is worth looking up the FLOPS for different precisions, which are listed on the GPU’s technical specification sheet.
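The memory argument can be sketched with simple arithmetic. The GPU size, parameter count, and per-example memory below are hypothetical, and the estimate keeps per-example memory fixed across precisions and ignores runtime overhead, so treat it as a rough illustration rather than a sizing tool.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_size_gb(num_params, dtype):
    """Approximate size of the model weights in gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def max_batch_size(gpu_memory_gb, num_params, dtype, per_example_gb):
    """Largest batch that fits after loading the weights (ignores overhead)."""
    free_gb = gpu_memory_gb - weights_size_gb(num_params, dtype)
    return max(0, int(free_gb // per_example_gb))


# Hypothetical setup: 16 GB GPU, 1B-parameter model, 0.5 GB per example.
for dtype in ("fp32", "fp16", "int8"):
    size = weights_size_gb(1_000_000_000, dtype)
    batch = max_batch_size(16, 1_000_000_000, dtype, per_example_gb=0.5)
    print(f"{dtype}: weights {size:.1f} GB, max batch size {batch}")
```

The smaller the weights, the more memory is left for inputs and activations, which is what allows the larger batches mentioned above.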

That’s it for now. Finally, if you’re looking for a budget-friendly translation API for your side projects, check out my Efficient NLP Translate API: it’s priced at 30% of the cost of the Google Translate API. You will get $10 in translation credits for free when you sign up.

Further reading:

- Lecture on model deployment from Full Stack Deep Learning: https://www.youtube.com/watch?v=W3hKjXg7fXM

- Article about ONNX Runtime: https://cloudblogs.microsoft.com/opensource/2023/02/08/performant-on-device-inferencing-with-onnx-runtime/

- Blog post about efficient inference for LLMs by distilling and quantization: https://finbarrtimbers.substack.com/p/efficient-llm-inference

- Paper about the different ways of doing int8 quantization: https://arxiv.org/abs/2004.09602